All published articles of this journal are available on ScienceDirect.

Plant Leaf Disease Detection and Classification Using Segmentation Encoder Techniques

Abstract

Aims

Agriculture is one of the fundamental elements of human civilization. Crops and plant leaves are susceptible to many illnesses when grown for agricultural purposes. There may be less possibility of further harm to the plants if the illnesses are identified and classified accurately and early on.

Background

Farmers typically predict and classify plant leaf diseases manually, which is tedious and often inaccurate. Manual identification takes more time and may not detect the disease correctly. Production can drop sharply if crop plants are destroyed because plant illnesses are detected and classified too slowly. Manual segmentation of leaf lesions by specialists also takes a lot of time and work.

Objective

It is established that deep learning models are superior to human specialists in the diagnosis of lesions on plant leaves. Here, the “Deep Convolutional Neural Network (DCNN)” based encoder-decoder architecture is suggested for the semantic segmentation of leaf lesions.

Methods

A proposed semantic segmentation model is based on the Dense-Net encoder. The LinkNet-34 segmentation model performance is compared with two other models, SegNet and PSPNet. Additionally, the two encoders, ResNeXt and InceptionV3, have been compared to the performance of DenseNet-121, the encoder used in the LinkNet-34 model. After that, two different optimizers, such as Adam and Adamax, are used to optimize the proposed model.

Results

The LinkNet-34 model with the DenseNet-121 encoder and the Adam optimizer outperformed the other models, with a dice coefficient of 95%, a Jaccard index of 93.2%, and a validation accuracy of 97.57%.

Conclusion

The detection and classification of leaf disease with deep learning models gives better results in comparison with other models.

1. INTRODUCTION

One of the biggest issues facing precision agriculture in recent years has been the automated diagnosis of plant diseases. Insect, bacterial, and fungal diseases reduce agricultural productivity and output and cause economic losses [1-3]. Leaf diseases are difficult to categorize due to significant similarities within classes and intricate pattern variations. Plant diseases may spread more quickly because of climate change. Early detection of diseases on plant leaves is one of the most important factors in guaranteeing high agricultural yield. Agriculture is a significant economic sector in India, contributing around 15.87% of the nation's GDP and 54.15% of jobs [4, 5]. Even though this sector has come a long way in the previous few years, crop damage from pests and diseases remains a big concern. The difficulty of identifying diseases on plant leaves early is a primary barrier to agricultural productivity. Plant diseases have a detrimental effect on food production and quality and, in many cases, can lead to total crop loss [6, 7].

Early detection and treatment are challenging because these anomalies are difficult to diagnose. In certain situations, crop losses may be reduced by early discovery and treatment. Furthermore, there is a chance to raise the yield and quality of the final agricultural output. Keeping track in real time of every sign and symptom that an illness produces can be difficult [8, 9]. Erroneous disease identification is always a possibility when the analysis is subjective. Occasionally, even plant pathologists or agronomists may misidentify the disease, leading to inadequate preventative actions [10].

The terms “Machine Learning (ML)” and “Deep Learning (DL)” have lately gained traction in response to the countless ways artificial intelligence is being used in daily life. In terms of usability, these words enable machines to “learn” many patterns before acting upon them [11, 12]. Applications can improve their prediction accuracy thanks to ML and DL, even if they were not designed with that purpose in mind. The relationship between deep learning and computer vision has led to the development of intelligent algorithms that can analyse and categorize patterns or images more precisely than humans. In the field of agriculture, the application of deep learning-based techniques is beginning to grow.

Deep learning has transformed the field of computer vision and is currently capable of handling a wide range of tasks, including automatic crop lesion identification, maintaining soil fertility, predicting rainfall, and predicting crop production [13]. Machine learning techniques have been applied to the identification of crop diseases. However, their scope and research are limited to disease classification of the crop. Furthermore, farmers are often unaware of biotic and abiotic leaf diseases in crops that are invisible to the human eye, so early leaf disease detection will benefit them when treating crops and plants to prevent disease [14]. A plant's physical properties alter with sickness, and this affects the plant's ability to grow. Deep learning methods must thus be applied to ascertain the leaf's morphological characteristics. In this work, plant leaf lesions are detected and diagnosed early using the proposed DL model. The CNN model has been the primary focus of the effort to characterize the morphological qualities of leaves since it increases lesion identification efficiency and accuracy. The extent and significance of the yield loss resulting from these lesions are acknowledged in a variety of stressful situations.

There are numerous methods for disease detection depending on where the illness is most prevalent in a plant, such as a node, stem, or leaf. In a study [15], the authors offer a novel deep learning method called “Ant Colony Optimization with Convolution Neural Network (ACO-CNN)” for the diagnosis and categorization of diseases. Ant colony optimization was used to examine how well disease identification in plant leaves worked. The CNN classifier is used to extract color, texture, and plant leaf arrangement from the given photos. In research [16], the authors suggested a transfer learning strategy combined with a CNN model known as the Modified “InceptionResNet-V2 (MIR-V2)” to identify diseases in pictures of tomato leaves. With the models used, the disease classification accuracy rate is 98.92%, and the F1 score is 97.94%. In a study [17], the author suggested the “Agriculture Detection (AgriDet)” framework, which uses deep learning networks based on Kohonen and traditional “Inception-Visual Geometry Group Network (INC-VGGN)” to identify plant illnesses and categorize the severity of affected plants. In another study [18], the author offers the novel “Deeper Lightweight Convolutional Neural Network Architecture (DLMC-Net)” for real-time agricultural applications that can detect plant leaf diseases in a variety of crops. Moreover, to extract deep characteristics, a series of collective blocks is introduced in conjunction with the passage layer.

In a study [19], an adapted PDICNet model for the identification and categorization of plant leaf diseases is presented. Additionally, the “Modified Red Deer optimization algorithm (MRDOA)” is implemented as an optimal feature selection algorithm to produce optimized and salient characteristics of reduced size. Likewise, to further improve classification performance, a “Deep Learning Convolutional Neural Network (DLCNN)” classifier model is used. In another study [20], the authors use convolution neural network (CNN) techniques and consider five classes of potato: Pink Rot, Black Scurf, Common Scab, Black Leg, and Healthy. A database with 5,000 photos of potatoes was employed, and the outcomes of the approach were contrasted with alternative approaches for classifying potato defects. Furthermore, to identify foliar diseases in plants, the authors of a study [21] propose a hybrid random forest Multiclass SVM (HRF-MCSVM) design. Likewise, to improve computation accuracy, the image features are pre-processed and segmented using Spatial Fuzzy C-Means prior to classification.

In a study [22], the authors reported that, despite testing numerous algorithms, CNN turned out to be the most effective and productive. The suggested model outperforms all other methods by requiring less computing time when tested on the test set, achieving an accuracy of 99.39% with a minimal error rate. The HDL models suggested in a study [23] demonstrated exceptional performance on the “IARI-TomEBD dataset” and achieved a high degree of accuracy in the range of 87.5%. Additionally, PlantVillage-TomEBD and Plant Village-BBLS, two publicly accessible plant disease datasets, were used to validate the suggested methodology. Lastly, the mean rank of the HDL models was determined using the Friedman statistical test. Across the three plant disease datasets, “EfNet-B3-ADB” and “EfNet-B3-SGB” had the highest rank, according to the results. In addition, because this study covers 14 different classifications of plant/crop species and 36 diseases, it offers a comprehensive basis on which researchers can develop and implement a system model. This research paper discusses common imaging techniques for object analysis and classification. Our study attempts to identify the algorithm that offers the most precise predictions for the detection of plant leaf diseases. The results are expected to be utilized to determine the optimal method for creating a smart system that can detect leaf illnesses.

The main contribution in this work is given as follows:

1. Three segmentation models, LinkNet-34, PSPNet, and SegNet, are evaluated for the detection of lesions. LinkNet-34 shows the best detection results in terms of dice coefficient and Jaccard index and achieves a validation accuracy of 97.57%.

2. The performances of three classification models are then compared, and DenseNet shows better accuracy results with Adam and Adamax optimizer with a learning rate of 0.0001.

3. A dataset of 51,806 images representing 36 types of plant leaf diseases is used to verify the efficacy and precision of the proposed hybrid approach.

4. The outcome is assessed using a variety of criteria, including recall, accuracy, and precision.

The rest of the paper is organized as follows: Section 2 describes the materials and methods used, and the proposed model is detailed in Section 3. The results of the proposed model are presented in Section 4 and discussed in Section 5. Finally, the conclusion and future work are presented.

2. MATERIALS AND METHODS

This section explains the proposed hybrid approach, which classifies the lesions on specific plants by using a CNN model trained on various crop leaf pictures.

2.1. Materials

2.1.1. Data Collection

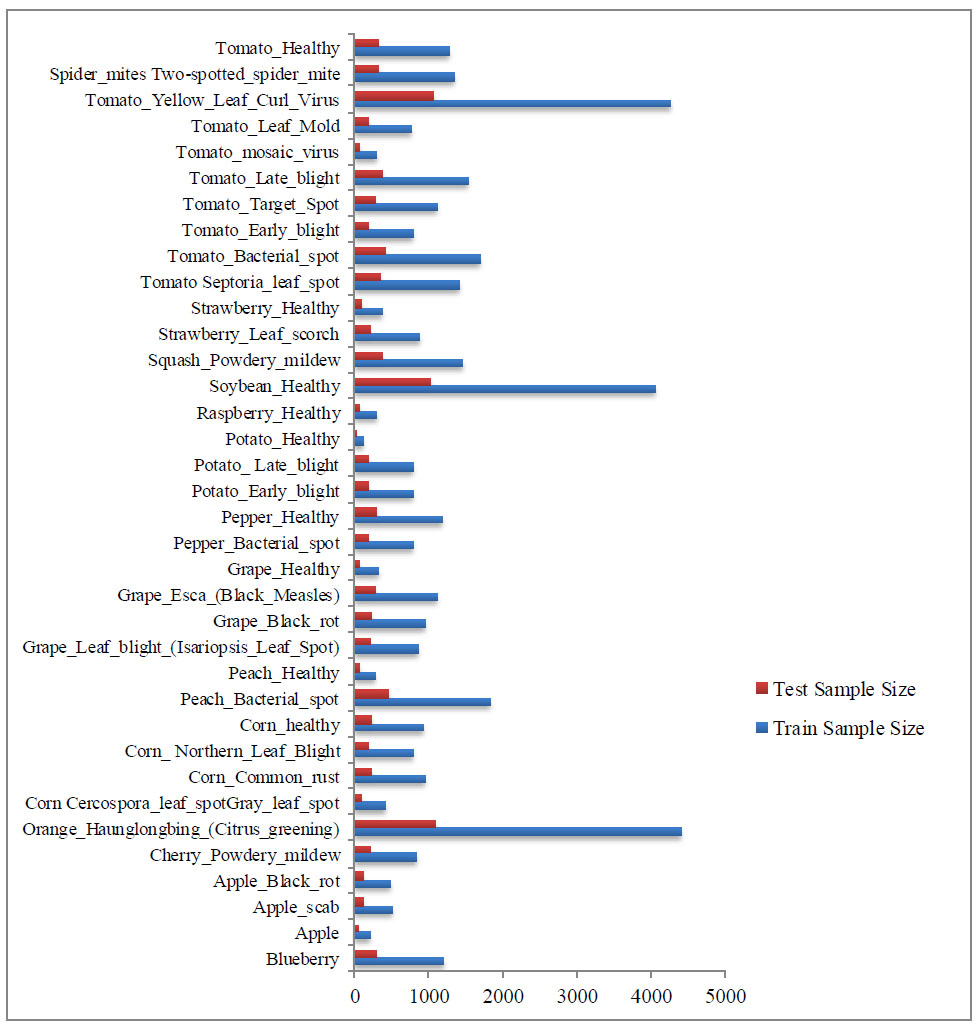

Plant Village [24] provided the plant leaf photos that were utilized to assess the performances (accessed on January 20, 2024). The public Plant Village dataset (PlantVillageDataset) includes 54,305 plant leaf images, both healthy and diseased, that were gathered in a controlled environment. Fourteen distinct crop types are included in the dataset, among them apple, blueberry, grape, orange, cherry, potato, peach, raspberry, strawberry, soybean, and squash. Fig. (1) displays a selection of sample images, and Fig. (2) provides an overview of the number of images collected for lesion identification and detection. It also includes the sample sizes for training and testing. The following common stresses cause diseases: water availability, temperature, nutrients, bacteria, viruses, and fungi.

Fig. (1). Sample examples of plant leaf images.

Fig. (2). Number of plant disease dataset samples.

The data collection technique is essential in real-time operations since inaccurate data in a dataset can affect how an experiment turns out. Therefore, when gathering data, it is important to state and adhere to the common norm.

The 51,806-image dataset is divided into two subsets, with an 80:20 training-to-testing ratio. Of the thirty-eight classes that make up our data, thirteen are healthy classes, whereas the remaining classes show different plant leaf diseases. The data consist of RGB images of 256 × 256 pixels depicting leaves. Healthy and diseased leaves can be distinguished based on their class labels. During the photo shoot, care was taken to ensure that every picture included a single centred leaf. In addition, the lighting and shooting environment remain consistent. After analysing a range of data, it is only natural to have questions about how to use the information efficiently.
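The 80:20 division described above can be sketched as a simple shuffled split. This is only an illustration under stated assumptions: the file names, the fixed seed, and the use of a plain (non-stratified) random split are hypothetical choices, not details given in the paper.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle a list of samples and split it into training and testing subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)      # reproducible shuffle
    cut = int(len(shuffled) * train_fraction)  # index where the test subset starts
    return shuffled[:cut], shuffled[cut:]

# Hypothetical file names standing in for the 51,806 leaf images.
image_ids = [f"leaf_{i:05d}.jpg" for i in range(51806)]
train_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(test_ids))
```

A class-stratified split would keep the per-class proportions equal in both subsets; whether the authors stratified is not stated.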

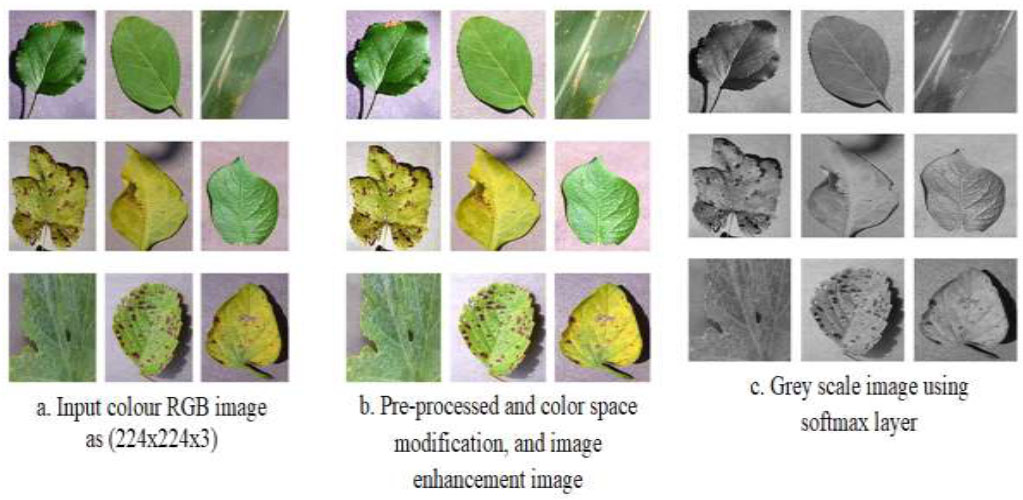

2.1.2. Pre-Processing and Augmentation

It is well known that data gathered from any source can become contaminated by several elements, such as noise and human mistakes. If the algorithm uses such data directly, it can yield erroneous results. Thus, the next step is to pre-process the input data. Pre-processing of data is done to enhance its quality, minimize or remove noise from the original input data, etc. Among the pre-processing methods are noise reduction, scaling, color space modification, and image enhancement. The leaf image in this work is scaled to 224 x 224 x 3, which is then utilized to assess the Hybrid model's performance. Fig. (3) shows the sample of the image after pre-processing.

After the conversion from RGB to BGR, each color channel is zero-centred with respect to the PlantVillage dataset, without scaling. Data augmentation, such as horizontal shift, vertical shift, flips, and zoom, is a crucial part of data preparation because it increases the number of images and reduces overfitting.
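The channel conversion, zero-centring, and two of the augmentations named above can be sketched in plain Python on nested lists. The function names, the tiny 2 x 2 image, and the zero padding used for the shift are assumptions made for illustration; in practice the channel means would be computed over the training set and an image library would be used.

```python
def rgb_to_bgr(image):
    """Reverse the channel order of every pixel (RGB -> BGR)."""
    return [[list(pixel[::-1]) for pixel in row] for row in image]

def channel_means(images):
    """Mean value of each channel over all pixels of all images."""
    totals, count = [0.0, 0.0, 0.0], 0
    for image in images:
        for row in image:
            for pixel in row:
                for c in range(3):
                    totals[c] += pixel[c]
                count += 1
    return [total / count for total in totals]

def zero_center(image, means):
    """Subtract the per-channel mean from every pixel, without rescaling."""
    return [[[value - mean for value, mean in zip(pixel, means)]
             for pixel in row] for row in image]

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def horizontal_shift(image, offset, fill=(0, 0, 0)):
    """Shift right by `offset` pixels (left if negative), padding with `fill`."""
    width = len(image[0])
    offset = max(-width, min(width, offset))
    shifted = []
    for row in image:
        pad = [list(fill) for _ in range(abs(offset))]
        if offset >= 0:
            shifted.append(pad + row[:width - offset])
        else:
            shifted.append(row[-offset:] + pad)
    return shifted

# Tiny 2 x 2 RGB image used only for illustration.
image = [[[10, 20, 30], [40, 50, 60]],
         [[70, 80, 90], [100, 110, 120]]]

bgr = rgb_to_bgr(image)
means = channel_means([bgr])        # in practice: computed over the training set
centered = zero_center(bgr, means)
augmented = [horizontal_flip(image), horizontal_shift(image, 1)]
```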

2.2. Methods

In this paper, semantic leaf disease segmentation using deep convolutional neural networks and encoder-decoder architecture was applied to a dataset of plant images. The goal was to create a high-density segmentation map of a picture where each pixel is linked to a different kind of object or category. The three different semantic segmentation models used for the detection of lesions are LinkNet-34, PSPNet, and SegNet. The detected lesions are then classified using different classifiers named DenseNet-121 and ResNext-101, which are discussed in the sections below.

2.2.1. Object Detection Models

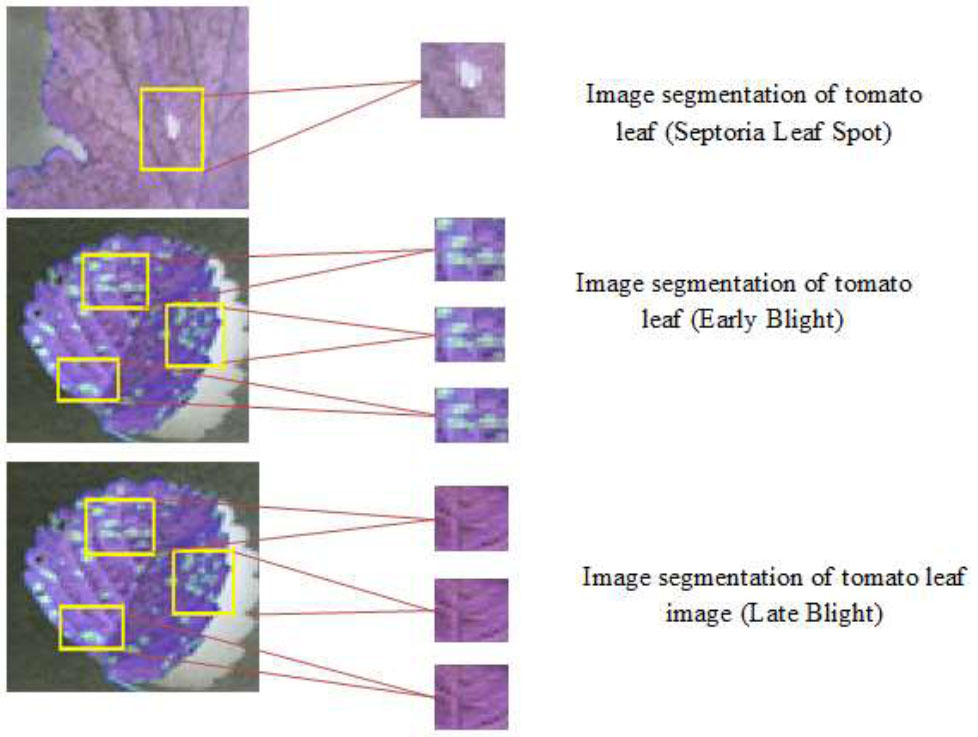

The images of the plant leaves are fed into each of the semantic segmentation models. The model is simulated using three semantic segmentation models: LinkNet-34 [25], PSPNet [26], and SegNet [27]. This module-based semantic segmentation paradigm aggregates context over distinct regions to exploit global context information. Together, global and local cues improve the final prediction. Furthermore, this method is based on the U-Net architecture, which is well known for its efficiency in semantic segmentation tasks. It can segment a wide variety of objects, including those visible in satellite photos and medical images. The purpose of the LinkNet-34 design is to make segmentation model training more efficient by integrating encoder and decoder techniques. It is made up of an encoder network that down-samples the input picture to extract high-level features and a decoder network that up-samples the feature map to produce predictions at the pixel level. LinkNet-34 uses skip connections to link the encoder and decoder. As in U-Net, these skip connections let low-level features propagate directly to the decoder, where they are combined with high-level information. Fig. (4) shows the object segmentation.

Fig. (3). After pre-processing of the image.

Fig. (4). Object segmentation using semantic segmentation of the image.

PSPNet excels in challenges involving semantic segmentation. Moreover, by giving each pixel in an input image a semantic label, semantic segmentation attempts to separate the image into areas that correspond to several item categories. PSPNet uses pyramid pooling modules to extract multi-scale context information from various input image regions. This aids in the model's ability to forecast pixels more accurately, particularly for objects of different sizes. PSPNet uses a pyramid structure in which contextual information is captured by applying global pooling at various scales and repeatedly downsampling the input feature map. Pixel-level predictions are then produced by combining and up-sampling the data. PSPNet captures context by global pooling at various pyramid levels, but it does not use the explicit feature fusion step that FPN does. Convolutional layers are used instead for feature combining and up-sampling. PSPNet works well for tasks like fine-grained object recognition, picture segmentation, and scene parsing, where pixel-level segmentation is crucial.
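The pyramid pooling idea described above can be sketched on a single-channel feature map: the map is average-pooled to several grid sizes, each pooled grid is up-sampled back to the input size, and the results are stacked with the original map as extra channels. This is a simplified illustration, not the PSPNet implementation: the bin sizes `(1, 2)`, the nearest-neighbour up-sampling, and the omission of the convolutional layers are all assumptions.

```python
def adaptive_avg_pool(feature, bins):
    """Average-pool a 2D feature map (list of rows) into a bins x bins grid."""
    height, width = len(feature), len(feature[0])
    pooled = []
    for i in range(bins):
        top = (i * height) // bins
        bottom = ((i + 1) * height + bins - 1) // bins   # ceiling division
        row = []
        for j in range(bins):
            left = (j * width) // bins
            right = ((j + 1) * width + bins - 1) // bins
            cells = [feature[y][x]
                     for y in range(top, bottom)
                     for x in range(left, right)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled

def upsample_nearest(pooled, height, width):
    """Resize a bins x bins grid back to height x width by nearest neighbour."""
    bins = len(pooled)
    return [[pooled[y * bins // height][x * bins // width] for x in range(width)]
            for y in range(height)]

def pyramid_pooling(feature, bin_sizes=(1, 2)):
    """Stack the input map with its multi-scale context maps as channels."""
    height, width = len(feature), len(feature[0])
    channels = [feature]
    for bins in bin_sizes:
        context = adaptive_avg_pool(feature, bins)
        channels.append(upsample_nearest(context, height, width))
    return channels

# 4 x 4 toy feature map with values 0..15.
feature = [[4 * y + x for x in range(4)] for y in range(4)]
stack = pyramid_pooling(feature)
```

With `bins=1` every pixel receives the global average, while larger grids give each pixel the average of its own region, which is how context at several scales reaches each pixel-level prediction.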

Seg-Net is an encoder-decoder model that has 26 convolutional layers in total. Thirteen convolutional layers from the VGG16 network are present in each of the contraction and expansion paths. Two fully connected (FC) layers are employed between the encoder and decoder networks. Each convolution produces a set of feature maps and is followed by a “Rectified Linear Unit (ReLU)” activation. This combination is used in downsampling up to a filter size of 1024.

Following each layer is a max-pooling operation with a stride of 2 for the feature map's downsampling. The number of feature channels/filter banks is doubled at the downsampling stage. There is a matching decoder layer for each encoder layer.

The decoder up-samples the input feature map by a factor of two. While the decoder has a multi-channel feature map, the first encoder only has three channels. The multi-dimensional feature map result is then utilized to solve the two-class classification problem by employing the sigmoid function to distinguish the plant pixels from the background. Seg-Net is a semantic-based scene segmentation technique.
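The down-sampling, up-sampling, and sigmoid steps described for Seg-Net can be sketched on a single-channel map. This is a minimal illustration under assumptions: nearest-neighbour up-sampling is used for simplicity, the convolutions are omitted, and the 0.5 threshold and the toy logits are hypothetical.

```python
import math

def max_pool_2x2(feature):
    """2x2 max pooling with stride 2 on a 2D map with even height and width."""
    return [[max(feature[y][x], feature[y][x + 1],
                 feature[y + 1][x], feature[y + 1][x + 1])
             for x in range(0, len(feature[0]), 2)]
            for y in range(0, len(feature), 2)]

def upsample_2x(feature):
    """Nearest-neighbour up-sampling by a factor of two in each dimension."""
    out = []
    for row in feature:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def sigmoid(x):
    """Logistic function mapping a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def binary_mask(logits, threshold=0.5):
    """Label each pixel as plant (1) or background (0)."""
    return [[1 if sigmoid(value) >= threshold else 0 for value in row]
            for row in logits]

# Toy 4 x 4 map of per-pixel logits.
logits = [[ 2.0, -1.0, -3.0, -2.0],
          [ 0.5,  1.0, -4.0, -1.0],
          [-2.0, -3.0,  3.0,  1.0],
          [-1.0, -2.0,  0.5,  2.0]]

pooled = max_pool_2x2(logits)     # encoder side: 4 x 4 -> 2 x 2
restored = upsample_2x(pooled)    # decoder side: 2 x 2 -> 4 x 4
mask = binary_mask(restored)      # two-class decision per pixel
```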

2.2.2. Classification Models

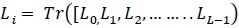

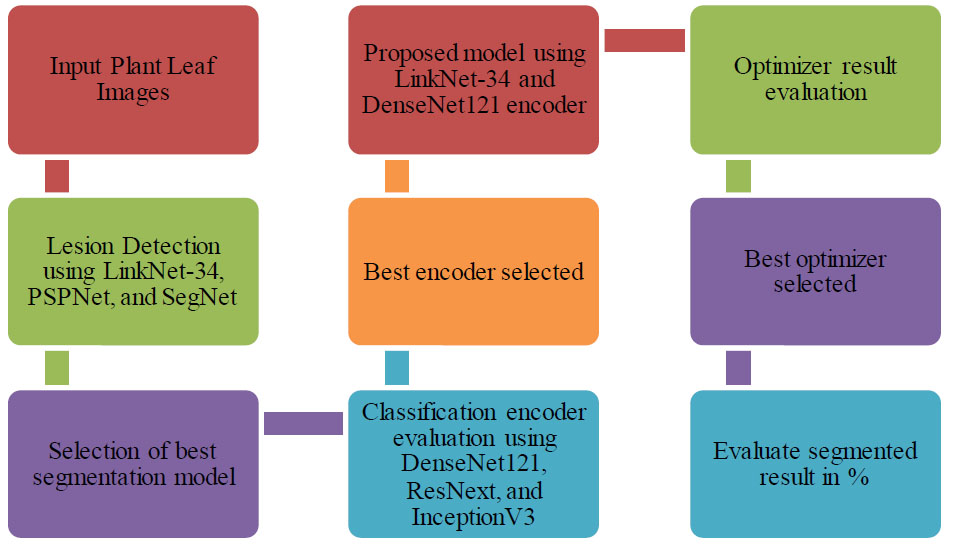

The CNN network DenseNet121 [28] features feed-forward connections between all its layers. A layer's input is the concatenation of all the feature maps from the previous layers, as shown in Fig. (5). Consider an N-layer network as an example. To create the input for the network's nth layer, all feature maps from the layers before it are combined, and the feature map of the nth layer is in turn passed to all subsequent layers. Consequently, an N-layer network has N(N+1)/2 direct connections between its layers. This approach has the added benefit of having fewer network parameters than a traditional convolutional neural network, since redundant feature maps are not re-learned. Dense connection patterns give every layer direct access to the gradients from the loss function and to the initial input signal, which improves the flow of gradients and information across the network. This mitigates the vanishing gradient problems that can occur while deeper architectures are being trained. Eq. (1) represents the dense connectivity of the network as the input of layer n.

$$L_n = Tr([L_0, L_1, L_2, \ldots, L_{n-1}]) \tag{1}$$

where $L_n$ is the layer's input, and $Tr([L_0, L_1, L_2, \ldots, L_{n-1}])$ is made up of the feature maps from layers 0 to n-1 concatenated together. The “Transition Block (TB)” and the “Dense Block (DB)” are the two primary building blocks of the network. Dense blocks are composed of multiple “Dense Layers (DL)”; each DL comprises one 1 x 1 Conv layer and one 3 x 3 Conv layer.
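The effect of dense connectivity on layer inputs can be sketched by tracking channel counts only. This is an illustration under assumptions: the growth rate of 32 and the 64 input channels are the values commonly used for DenseNet-121 and are not stated in this paper, and the connection count uses the standard N(N+1)/2 formula for a densely connected N-layer network.

```python
def dense_block_channels(input_channels, num_layers, growth_rate):
    """Return the input channel count seen by each dense layer and the
    channel count leaving the block."""
    layer_inputs = []
    channels = input_channels
    for _ in range(num_layers):
        layer_inputs.append(channels)   # layer n sees all earlier maps concatenated
        channels += growth_rate         # its own output is appended for later layers
    return layer_inputs, channels

def direct_connections(num_layers):
    """Number of direct connections in a densely connected N-layer network."""
    return num_layers * (num_layers + 1) // 2

# First dense block of six layers; 64 and 32 are assumed, not taken from the paper.
layer_inputs, block_output = dense_block_channels(64, 6, 32)
print(layer_inputs, block_output, direct_connections(6))
```

Each layer adds only a small number of new feature maps, which is why the parameter count stays low even though every layer receives all earlier feature maps.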

Transition blocks, which are made up of batch normalization layers, 1 x 1 convolution layers, and 2 x 2 average pooling layers, are placed between the dense blocks. DenseNet121, one DenseNet network implementation, includes four dense blocks with six, twelve, twenty-four, and sixteen sequentially organized dense layers, respectively. Fig. (5) displays the diagrammatical representation of DenseNet121.

Aggregated Residual Transform Network, or ResNeXt for short, is a CNN design that expands on the ideas of “Inception Networks and Residual Networks (ResNets).” It highlights the notion of cardinality to enhance performance and presents the idea of a divide, transform, and merge block. Such a block, which applies several transformations within the block, is used in ResNeXt. These transformations aid in the acquisition of various representations and the capture of various abstraction levels. The cardinality parameter specifies the number of transformation pathways inside the block. It has been demonstrated that raising cardinality improves the model's performance. ResNeXt has shown remarkable performance in several computer vision tasks, such as object detection, image segmentation, and image classification. ResNets, Inception Networks, and cardinality-based transformations are combined to produce ResNeXt, which improves accuracy while preserving computational efficiency. It should be noted that implementations and modifications may change the specifics of ResNeXt's architecture, including the number of layers, cardinality, and block configurations.

Inception V3 is the third iteration of Google's Inception family of deep learning architectures. The input layer of the 42-layer Inception V3 architecture accepts images at a resolution of 299 × 299 pixels, and the last layer applies the Softmax function.

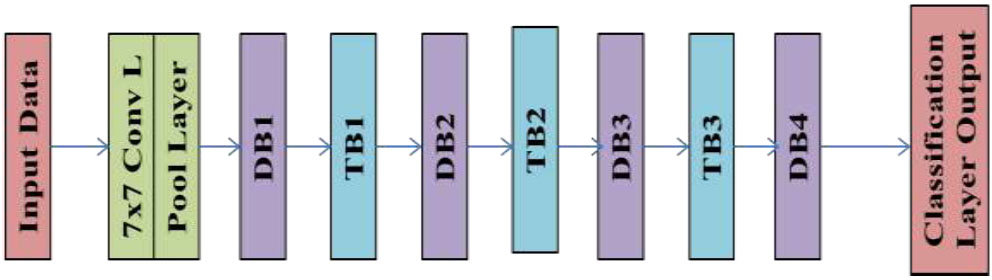

3. PROPOSED MODEL

For the semantic segmentation of plant leaf diseases, a “Deep Convolutional Neural Network (DCNN)” based encoder-decoder architecture is employed here. 80% of the dataset is used for training, while the remaining 20% is used for testing. The encoder uses a few filters and pooling operations to extract features from the input image to make pixel-by-pixel predictions. Then, the decoder gradually restores the encoder's low-resolution feature maps to their original, full input resolution. The aim of this study is to create a high-density segmentation map of an image where each pixel is linked to a unique class or kind of object. For modelling, the three semantic segmentation models, LinkNet-34, PSPNet, and SegNet, are fed leaf disease images as input. The three models are compared based on their validation “dice coefficient,” validation mean “Jaccard index,” and training and validation “loss curves.” After examining the three models, it can be said that the LinkNet-34 model performs the best when considering all performance metrics. Fig. (6) shows the suggested process for the detection and classification of leaf disease. During the encoder step of auto-encoder neural network image segmentation, the input image is down-sampled. An encoder-decoder neural network design needs an encoder component to learn the spatial and semantic features of an image. The encoder takes an image as input and reduces its resolution using many convolutional and pooling layers. Down-sampling and extracting the most important visual features reduces the processing load on the decoder.

The encoder portion's image downsampling is the main emphasis of the suggested technique. To ascertain which encoder performs best, a simulation is conducted utilizing three distinct encoders on the LinkNet-34 architecture: DenseNet121, ResNext, and InceptionV3. When compared to the PSPNet and SegNet models, the experimental analysis shows that the LinkNet-34 model performs the best for semantic segmentation. Subsequently, the optimal LinkNet-34 model is combined with three distinct encoders: DenseNet121, ResNext, and InceptionV3. Of these, DenseNet121 has demonstrated the highest encoder performance. The findings support the proposal of the LinkNet-34 model with the DenseNet121 encoder for lesion segmentation from plant disease images. Finally, using the Adam optimizer, the recommended model's parameters are updated to lower the loss function. This is an important step in the training process because the model's performance and training speed can be significantly impacted by the optimizer selection and its hyper-parameters.
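How the Adam optimizer updates parameters to lower a loss can be sketched with a standard Adam step on a toy one-parameter problem. The learning rate of 0.0001 matches the value listed in the contributions; the decay rates `beta1`, `beta2`, and `eps` are the commonly used defaults and are assumptions here, as is the toy quadratic loss.

```python
import math

def adam_step(params, grads, state, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update and return the new parameter list."""
    state["t"] += 1
    t = state["t"]
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        # Exponential moving averages of the gradient and its square.
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        # Bias correction for the zero-initialised averages.
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params

def loss(w):
    """Toy loss with its minimum at w = 3 (illustrative only)."""
    return (w[0] - 3.0) ** 2

def grad(w):
    """Gradient of the toy loss."""
    return [2.0 * (w[0] - 3.0)]

w = [0.0]
state = {"t": 0, "m": [0.0], "v": [0.0]}
history = []
for _ in range(100):
    w = adam_step(w, grad(w), state)
    history.append(w[0])
```

Because the update is normalised by the running gradient magnitude, each early step moves the parameter by roughly the learning rate, which is one reason the choice of learning rate strongly affects training speed.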

Fig. (5). DenseNet model representation.

Fig. (6). Proposed methodology for plant leaf lesion detection and classification.

4. RESULTS

Here, a semantic segmentation model based on an intelligent LinkNet-34 model with a DenseNet121 encoder is provided. The performance of two other models— SegNet and PSPNet—is compared with the LinkNet-34 model. Additionally, two different encoders—ResNext and InceptionV3—have been compared to see how well the DenseNet121 performs as an encoder in the LinkNet-34 model. After that, the suggested method is improved by employing the Adam optimizer.

4.1. Evaluation Metrics

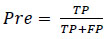

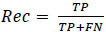

The result of plant leaf lesion detection is evaluated using several metrics, namely precision, recall, F1-score, accuracy, Jaccard index, and dice coefficient. Precision “Pre” is the ratio of correctly detected targets to all targets detected by the model. Eq. (2) provides the precision formula, in which “TP” (true positive) counts predictions that correctly identify a leaf lesion, whereas “FP” (false positive) counts predictions that incorrectly report a lesion [29].

$$\text{Pre} = \frac{TP}{TP + FP} \tag{2}$$

Recall “Rec” is the proportion of all actual targets that the model correctly detected [30]. Eq. (3) provides the recall formula, in which “TP” counts correctly detected lesions and “FN” (false negative) counts leaf lesions, the target, that the model failed to detect.

$$\text{Rec} = \frac{TP}{TP + FN} \tag{3}$$

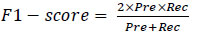

The F1 score is an additional metric for classification accuracy that takes recall and precision into account. Since the F1-score is the harmonic mean of the Precision and Recall values, it summarizes both in a single number [31]. Eq. (4) gives its formula; for a fixed sum of Precision and Recall, the F1-score is maximal when the two are equal.

$$F1 = \frac{2 \times \text{Pre} \times \text{Rec}}{\text{Pre} + \text{Rec}} \tag{4}$$

One parameter used to assess classification models is accuracy. Roughly speaking, accuracy is the percentage of correct predictions our model made. Formally, accuracy is the proportion of correctly labelled images to all samples [32, 33]. Eq. (5) shows the mathematical representation of accuracy, where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$
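The four classification metrics above follow directly from the confusion-matrix counts. The sketch below computes them with the standard definitions; the counts in the example are made up purely for illustration and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1-score, and accuracy from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)   # harmonic mean
    else:
        f1 = 0.0
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}

# Hypothetical counts for a lesion / no-lesion decision over 1,000 samples.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=870)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```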

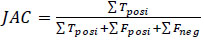

The “Jaccard Index (JAC)” in Eq. (6) is the size of the intersection (spatial overlap) of two label sets A and B divided by the size of their union.

$$\text{JAC}(A, B) = \frac{|A \cap B|}{|A \cup B|} \tag{6}$$

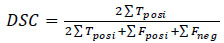

The spatial overlap between two binary images is measured by the “Dice Similarity Coefficient (DSC),” whose values range from zero (no overlap) to one (perfect overlap). Eq. (7) gives the DSC between the segmentation result A and the ground truth B.

$$\text{DSC}(A, B) = \frac{2|A \cap B|}{|A| + |B|} \tag{7}$$
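Both overlap measures can be computed directly from a predicted mask and a ground-truth mask. The sketch below uses the standard set definitions on small binary masks; the masks are made up for illustration, and returning 1.0 when both masks are empty is a convention assumed here.

```python
def overlap_counts(pred, truth):
    """Return (|A ∩ B|, |A|, |B|) for two binary masks of equal shape."""
    inter = size_pred = size_truth = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += 1 if (p and t) else 0
            size_pred += 1 if p else 0
            size_truth += 1 if t else 0
    return inter, size_pred, size_truth

def jaccard_index(pred, truth):
    """Intersection over union of the two masks."""
    inter, a, b = overlap_counts(pred, truth)
    union = a + b - inter
    return inter / union if union else 1.0

def dice_coefficient(pred, truth):
    """Twice the intersection divided by the sum of the mask sizes."""
    inter, a, b = overlap_counts(pred, truth)
    return 2 * inter / (a + b) if (a + b) else 1.0

# Toy 2 x 3 masks: 1 marks a lesion pixel, 0 marks background.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]

jac = jaccard_index(pred, truth)
dsc = dice_coefficient(pred, truth)
print(jac, dsc)
```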

The model predicts the likelihood that an image will belong to each class and is used to categorize a huge number of generated photos.

4.2. Result Based on Segmentation and Classification

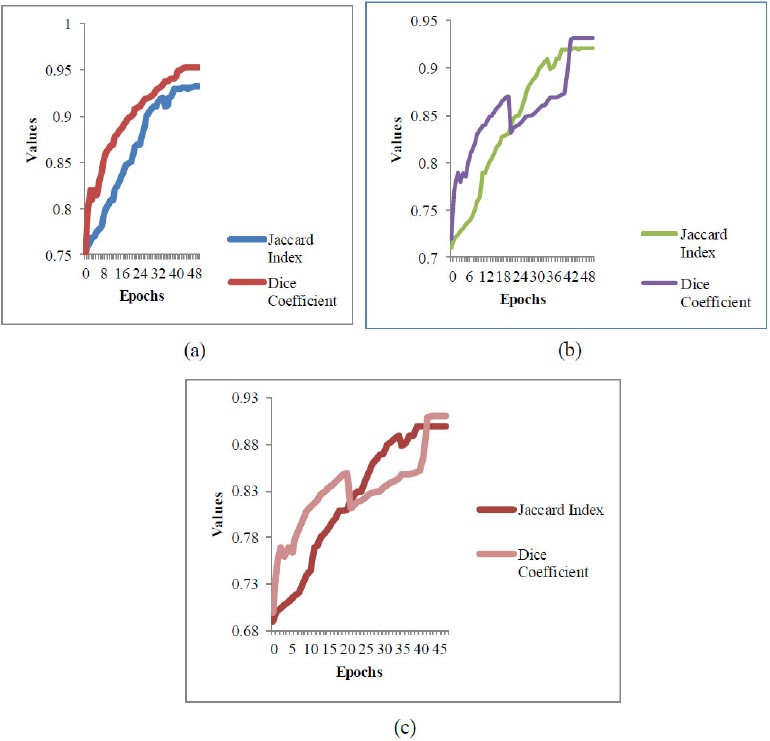

The LinkNet-34 model has been applied to the dataset of 41465 plant leaf disease images. The training and validation curves for the LinkNet-34 model architecture across 50 epochs are shown in Fig. (7). The validation loss at the 23rd and 40th epochs is 0.008 and 0.006, respectively. Generalizing this pattern, the loss diminishes as the number of epochs increases. The dice coefficient and the Jaccard index are highly correlated. For every scenario, the model rankings derived from the two criteria are identical. The Jaccard index value rises after every 10 epochs. After the 42nd epoch, the dice value with the LinkNet-34 segmentation model reaches a maximum of 95% and remains constant until the 50th epoch.
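The close association between the two criteria can be made explicit for a single pair of masks. The short derivation below is a sketch under the standard set definitions, writing $J$ for the Jaccard index and $D$ for the dice coefficient of a segmentation result $A$ and a ground truth $B$ (symbols introduced here for illustration). It holds per image, so values averaged over a dataset need not satisfy it exactly.

$$
\begin{aligned}
J &= \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}, \qquad D = \frac{2|A \cap B|}{|A| + |B|} \\
\Rightarrow \quad D &= \frac{2J}{1 + J}, \qquad J = \frac{D}{2 - D}
\end{aligned}
$$

Since $dD/dJ = 2/(1 + J)^2 > 0$, $D$ is a strictly increasing function of $J$ on $[0, 1]$, so ranking segmentations of the same image by either measure gives the same order.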

Fig. (7). Graphical representation of segmentation values.

As shown in Fig. (7), LinkNet-34 segments the lesion objects with a dice coefficient of 95.2% and a Jaccard index of 93.2%. Fig. (7a) shows the segmentation values of the LinkNet-34 model, (b) shows those of PSPNet, and (c) shows those of the SegNet model. For PSPNet, the dice coefficient drops after the 20th epoch, then rises to 93% and stays at 93.2% after the 44th epoch. For this dice value, the Jaccard index shows a maximum value of 92.1%. The SegNet segmentation model detects the lesions of plant leaves less well: it shows a dice coefficient of 91.1% with a Jaccard index of 90%. Of the three segmentation models, LinkNet-34 detects the lesions better than the other two.

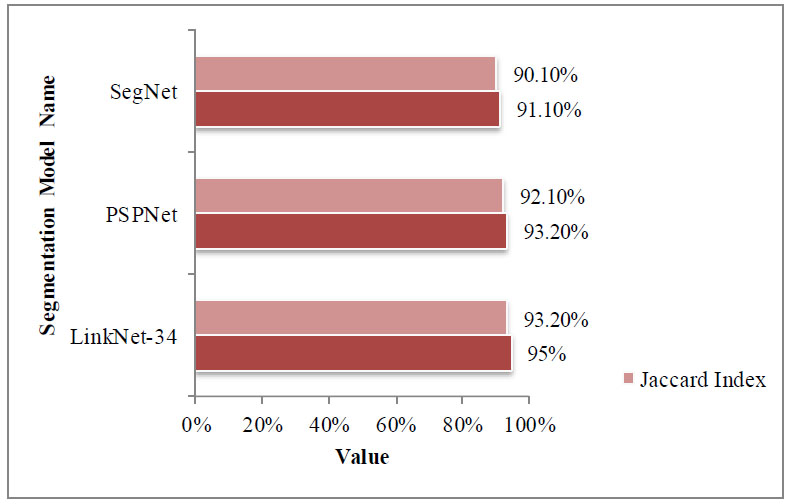

Fig. (8). Comparison of segmentation models.

From Fig. (8), it is visible that LinkNet-34 detects the plant leaf lesions with a maximum dice coefficient of 95%, and SegNet detects them with a value of 91.10%. As shown in Fig. (9), LinkNet-34 detects the lesions with better accuracy in comparison with the other segmentation models. LinkNet-34 correctly identifies the lesion objects, whereas the other models confuse lesions with healthy objects.

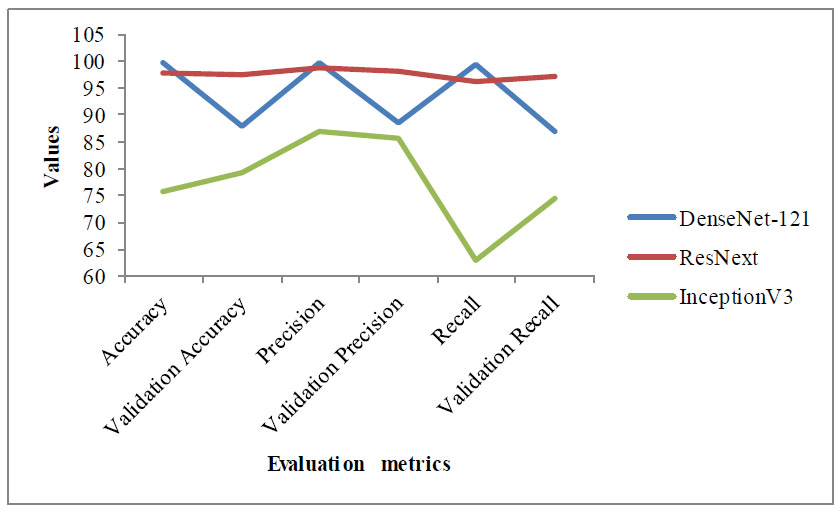

Fig. (9). Lesion detection using DenseNet-121, ResNext, and InceptionV3.

As shown in Fig. (9), DenseNet121 detects lesions with better accuracy in comparison with the other two classification models. Table 1 shows the result in terms of different evaluation metrics using different classifiers.

The DenseNet-121 detects and classifies the objects as healthy and unhealthy with a validation accuracy of 97.57% which is far better in comparison with other models. The existing CNN model ResNet has taken less time compared to other models. Table 1 shows the evaluation result of all the models with different evaluation metrics such as accuracy, validation accuracy, precision, validation precision, recall, and loss. The graphical representation of the evaluation result is shown in Fig. (10).

LinkNet-34 segments the lesion objects with better results in comparison with SegNet and PSPNet. Then, the proposed model combines LinkNet-34 with DenseNet-121, ResNext, and InceptionV3. Of these combinations, LinkNet-34 with DenseNet-121 shows better detection and classification results than the others. The validation loss with DenseNet-121 is 0.33, which is the smallest among all the models. It detects the lesion part with better accuracy.

Table 1. Evaluation results of all the models.

| Metric | ResNet | DenseNet-121 | InceptionV3 |
|---|---|---|---|
| Accuracy | 99.60% | 97.81% | 75.67% |
| Validation Accuracy | 87.88% | 97.57% | 79.37% |
| Precision | 98.60% | 98.91% | 86.82% |
| Validation Precision | 88.54% | 97.98% | 85.82% |
| Recall | 99.57% | 96.21% | 63.30% |
| Validation Recall | 86.98% | 97.29% | 74.57% |
| Loss | 0.01387934 | 0.346543908 | 1.169343233 |
| Validation Loss | 0.432095468 | 0.330930889 | 1.055114508 |
| Time Efficiency | 1140.18s | 1960.02s | 2101.76s |

Fig. (10). Graphical representation of different models.

Table 2. Comparison with our proposed model.

| Study | Model Name | Dataset Name | Accuracy |
|---|---|---|---|
| [30] | Proposed CNN | Wheat leaf images | 96% |
| [31] | Modified ResNet50 | Wheat leaf images | 98.44% |
| [32] | Optimized Capsule Neural Network | Tomato leaf images | 96.39% |
| [34] | Harnessing Deep Learning (DL) | Rice leaf images | 99.94% |
| [35] | TrioConvTomatoNet, a novel deep convolutional neural network architecture | Tomato leaf images | 99.39% |
| [36] | Different CNN models | Tomato leaf images | 99.43% |
| [37] | Proposed CNN | Potato leaf images | 98.28% |
| - | Our Proposed Model | Plant Leaf | 97.57% |

5. DISCUSSION

The performance of different models is studied and compared with our proposed model. Different datasets are used for the comparison with different models. Some authors proposed a CNN model [28] and a modified ResNet50 model [29] for wheat leaf images, which achieve accuracies of 96% and 98.44%, respectively. Table 2 shows the comparison with our proposed model. The lesion objects are detected using three different segmentation models: LinkNet-34, PSPNet, and SegNet. LinkNet-34 detects the lesions with a better dice coefficient value of 95% and a Jaccard index of 93.2%. Then, LinkNet-34 is fused with classification models such as DenseNet-121, ResNet, and InceptionV3. DenseNet-121 detects the lesions with an accuracy of 97.57%, which is better than the other models.

Based on Table 2, the models proposed for wheat leaf images detect disease with accuracies of 96% and 98.44%. The optimized Capsule Neural Network detects it with an accuracy of 96.39%. Among the three classifiers fused with LinkNet-34 in our proposed model, DenseNet-121, ResNet, and InceptionV3, the best accuracy, 97.57%, is obtained with DenseNet-121. Dilated convolution layers are located at the heart of the network and are constructed using the LinkNet design. The compute and memory efficiency of the LinkNet architecture is high. Dilated convolution is an effective technique that can increase the receptive fields of feature points without lowering feature map resolution.

CONCLUSION

This study suggests a model that consists of two transfer learning phases. To identify and categorize lesion objects, the segmentation model and the classifier are connected. In addition to 36 different plant leaf diseases, the model can categorize the entire instance. The first results showed a significant improvement over the present crop disease classification systems, with 97.57% classification accuracy. Even though our approach, which combines a fusion model and transfer learning, may seem more difficult to use and less accurate than some other approaches now in use, increasing the number of training epochs and training inputs will improve the accuracy of the suggested system. In addition, the structure provides early identification, fast processing, reduced parameters, and fewer epochs; a total of 50 epochs were examined to find lesions. These advantages allow this structure to function in a concurrent environment. The proposed model can be applied to the identification and detection of disease in any crop. Furthermore, drones can be used to capture images of the crops or woodlands below them to create an accurate and reasonably priced dataset. Because of its faster processing speed, the suggested system is a good choice for installation on drones to provide real-time crop disease detection.

AUTHOR’S CONTRIBUTION

It is hereby acknowledged that all authors have accepted responsibility for the manuscript's content and consented to its submission. They have meticulously reviewed all results and unanimously approved the final version of the manuscript.